Study Results

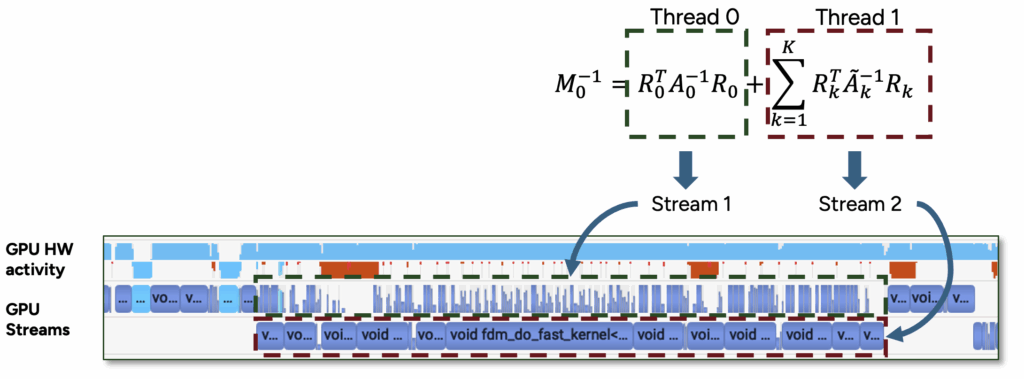

The goal of the STRAUSS study was to enable efficient and accurate computational fluid dynamics (CFD) simulations on current and future exascale systems. The project developed a new scalable, task-parallel multigrid solver and integrated it into the Neko framework. The solver increases GPU utilization by overlapping independent tasks, and it reduces synchronization overhead through direct device communication.
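The benefit of overlapping independent tasks can be illustrated with an additive two-level preconditioner. In this scheme the fine-level smoother and the coarse-grid correction each depend only on the current residual, so they can be computed at the same time and then summed. The sketch below is a minimal, hypothetical illustration in Python with NumPy and is not the Neko implementation. It assumes a 1D Poisson model problem, a damped Jacobi smoother, and linear interpolation between two levels. A thread pool stands in for concurrent GPU streams, and all names (`AdditiveTwoLevel`, `pcg`, `poisson_1d`, `prolongation`) are illustrative.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def poisson_1d(n):
    """3-point Laplacian stencil [-1, 2, -1] on n interior points (Dirichlet BCs)."""
    return (np.diag(2.0 * np.ones(n))
            - np.diag(np.ones(n - 1), 1)
            - np.diag(np.ones(n - 1), -1))


def prolongation(nc):
    """Linear interpolation from nc coarse points to 2*nc + 1 fine points."""
    P = np.zeros((2 * nc + 1, nc))
    for j in range(nc):
        i = 2 * j + 1  # fine-grid index that coincides with coarse point j
        P[i - 1, j], P[i, j], P[i + 1, j] = 0.5, 1.0, 0.5
    return P


class AdditiveTwoLevel:
    """Hypothetical additive two-level preconditioner: M^-1 r = S r + P Ac^-1 P^T r."""

    def __init__(self, A, P, pool, omega=2.0 / 3.0):
        self.dinv = omega / np.diag(A)  # damped Jacobi smoother
        self.P = P
        self.Ac = P.T @ A @ P           # Galerkin coarse-grid operator
        self.pool = pool                # stand-in for independent GPU streams

    def smooth(self, r):
        return self.dinv * r

    def coarse(self, r):
        return self.P @ np.linalg.solve(self.Ac, self.P.T @ r)

    def apply(self, r):
        # Both corrections read only r, so they are submitted as independent tasks.
        fine = self.pool.submit(self.smooth, r)
        coarse = self.pool.submit(self.coarse, r)
        return fine.result() + coarse.result()


def pcg(A, b, apply_prec, tol=1e-10, maxiter=500):
    """Preconditioned conjugate gradient; returns (solution, iterations used)."""
    x = np.zeros_like(b)
    r = b - A @ x
    z = apply_prec(r)
    p = z.copy()
    rz = r @ z
    for k in range(maxiter):
        Ap = A @ p
        alpha = rz / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        if np.linalg.norm(r) < tol * np.linalg.norm(b):
            return x, k + 1
        z = apply_prec(r)
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x, maxiter


nc = 31
A = poisson_1d(2 * nc + 1)
P = prolongation(nc)
b = np.ones(A.shape[0])
with ThreadPoolExecutor(max_workers=2) as pool:
    M = AdditiveTwoLevel(A, P, pool)
    x, iters = pcg(A, b, M.apply)
print(f"PCG iterations: {iters}, residual: {np.linalg.norm(b - A @ x):.2e}")
```

Because the additive form sums the two corrections, neither has to wait for the other. A multiplicative V-cycle would instead apply them one after the other. On a GPU, running such tasks concurrently mainly helps when each task alone is too small to saturate the device.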

The new approach removes the need to artificially inflate problem sizes just to saturate the GPUs. As a result, high-fidelity simulations at physically meaningful problem sizes can be strongly scaled across large HPC systems. Benchmark results show that the solver maintains its performance at smaller element counts per GPU, which lowers the workload each accelerator needs to run efficiently while retaining strong scalability. The implementation is open source and part of the Neko codebase, so it can be adopted directly by the European CFD and exascale computing community.
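Strong-scaling behavior of this kind is usually quantified by holding the total number of elements fixed while the GPU count grows. The elements per GPU then shrink, and the parallel efficiency shows how much performance is retained. The Python sketch below computes these quantities from wall-clock timings. The function name `strong_scaling` and the timing values in the example are hypothetical placeholders, not STRAUSS benchmark data.

```python
def strong_scaling(total_elements, timings):
    """Per-GPU workload, speedup, and parallel efficiency for a fixed-size problem.

    timings maps GPU count -> wall-clock time (seconds) for the same problem.
    Speedup and efficiency are measured relative to the smallest GPU count.
    """
    base_gpus = min(timings)
    base_time = timings[base_gpus]
    rows = []
    for gpus in sorted(timings):
        speedup = base_time / timings[gpus]
        efficiency = speedup * base_gpus / gpus  # 1.0 means ideal strong scaling
        rows.append((gpus, total_elements / gpus, speedup, efficiency))
    return rows


# Hypothetical, ideally scaling timings, for illustration only.
rows = strong_scaling(1_048_576, {8: 64.0, 16: 32.0, 32: 16.0})
for gpus, elems_per_gpu, speedup, eff in rows:
    print(f"{gpus:3d} GPUs  {elems_per_gpu:9.0f} elements/GPU  "
          f"speedup {speedup:4.1f}  efficiency {eff:.2f}")
```

Suppose a solver needs a large per-GPU workload to saturate the device. In a table like this, its efficiency drops as the elements per GPU shrink. A solver that keeps its efficiency close to 1.0 further down the table can be strongly scaled to more GPUs for the same problem.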

Benefits

CFD is foundational in industries such as automotive, aerospace, and energy, and it plays a crucial role in understanding turbulence, a major contributor to global energy consumption. Simulating turbulence accurately and efficiently can lead to innovations such as drag reduction, improved fuel efficiency, and optimized wind turbine and aircraft designs. Even modest gains in turbulent flow control can have billion-dollar impacts and significant environmental benefits through reduced CO₂ emissions.

The algorithms developed in STRAUSS are particularly relevant for exascale CFD workflows. They allow Neko, and potentially other high-order frameworks such as NekRS, deal.II, and Nektar++, to run efficiently on GPU-based architectures. The solver's general design also allows it to be integrated into a wider range of matrix-free, high-order solvers.

Beyond their direct use in Neko, the methods developed here could inform best practices for porting and optimizing scientific codes for modern accelerators. They may serve as a template for GPU-centric solver design across multiple scientific domains. Furthermore, the improved scalability makes STRAUSS a strong foundation for a future ACM Gordon Bell Prize submission, building on previous nominations by the Neko team.

Partners

The PDC Center for High Performance Computing at KTH Royal Institute of Technology (Sweden) is one of Sweden's premier high-performance computing centers, providing cutting-edge computational infrastructure and expert support for research across scientific domains. As a core partner of the National Academic Infrastructure for Supercomputing in Sweden (NAISS), PDC contributes to national and European HPC initiatives, facilitates scientific software development, and enables scalable solutions on state-of-the-art systems.

The National Academic Infrastructure for Supercomputing in Sweden (NAISS) is Sweden's national organization for academic high-performance computing, supporting researchers with advanced computational resources, data services, and expertise. Hosted at Linköping University, NAISS operates Sweden's largest supercomputers and collaborates closely with regional centers such as PDC at KTH to drive innovation in HPC and scientific discovery.

Ludwig-Maximilians-Universität München (LMU Munich) provides seismology expertise, including earthquake modeling and the design of Bayesian inference problems.

Team

- Dr. Tuan Anh Dao

- Dr. Xavier Aguilar

- Dr. Niclas Jansson

Contact

Name: Dr. Niclas Jansson

Institution: KTH Royal Institute of Technology

Email Address: njansson@kth.se