Study Results

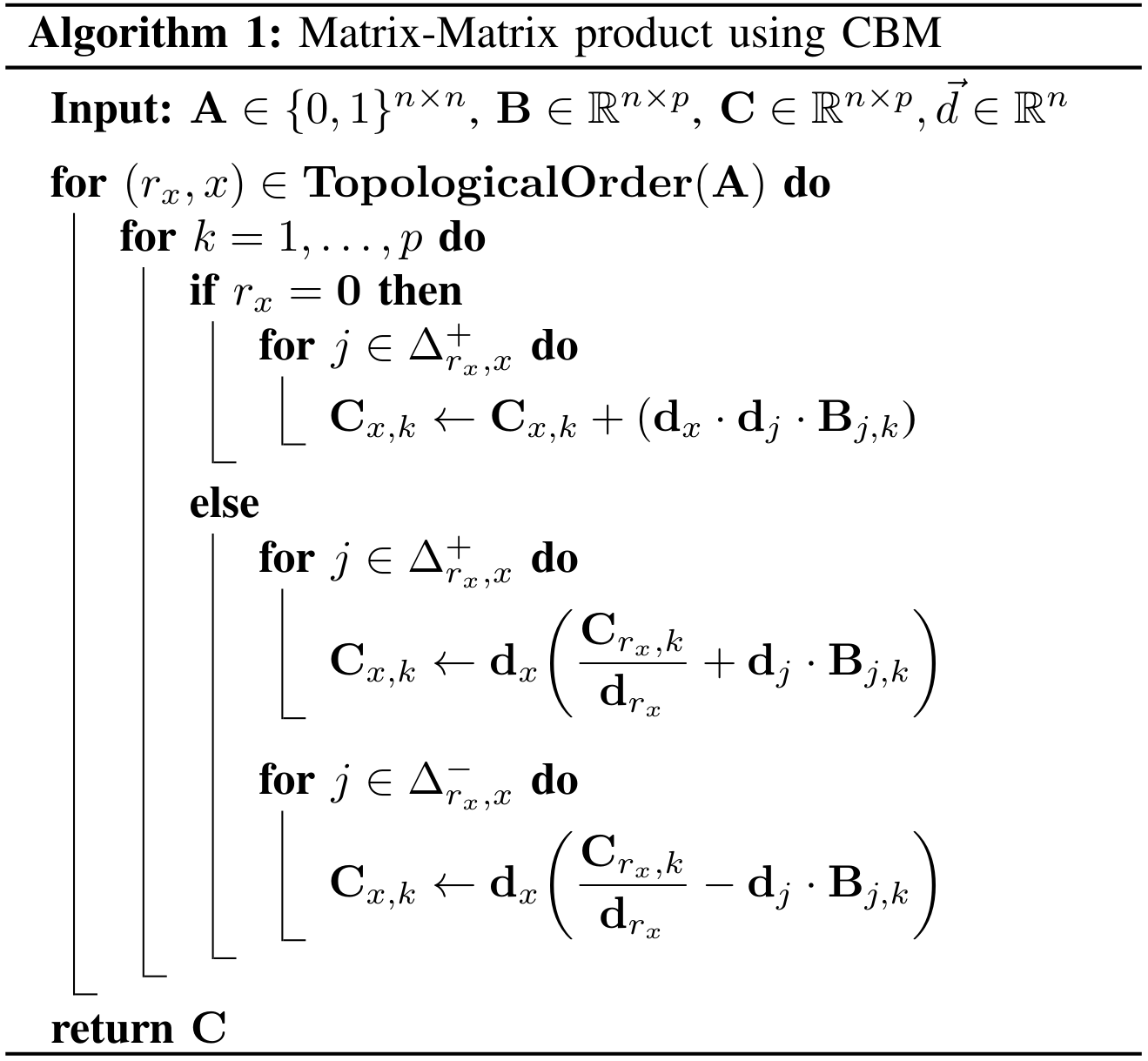

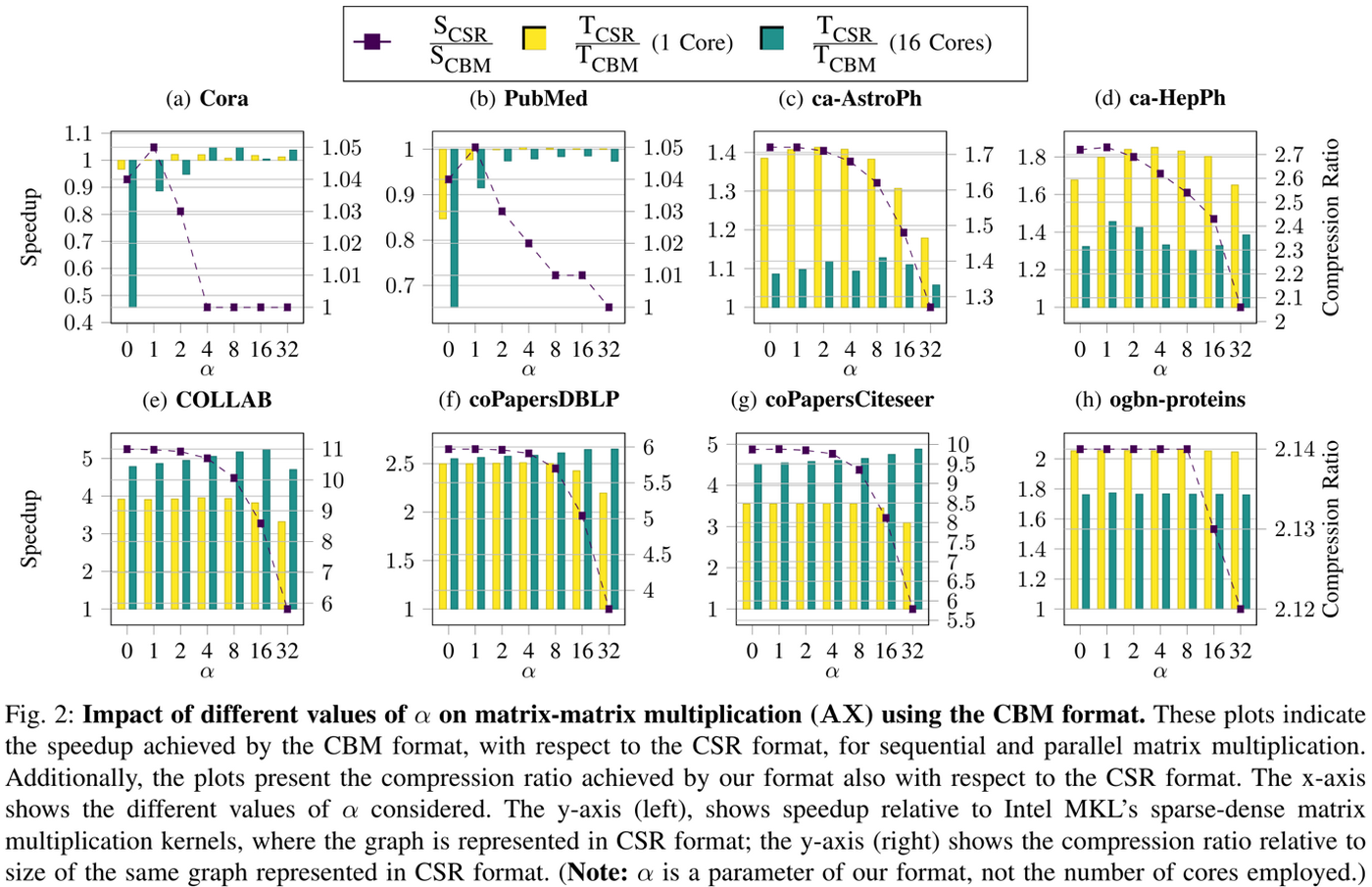

The study successfully implemented the CBM format for compressing sparse Boolean matrices and developed the CBMM kernel for efficient matrix multiplication. The implementation supports both sequential and parallel execution on CPUs and GPUs and was integrated into the PyTorch framework. Experimental evaluations demonstrated that the CBM format achieves significant memory savings, reducing the memory footprint of real-world graphs by up to 11×. The CBMM kernel achieved speedups of up to 5× over Intel MKL and up to 2.6× over NVIDIA cuSPARSE. In GNN applications, using CBMM yielded performance gains of up to 3× in both training and inference, showing strong potential for improving time- and energy-to-solution in real-world AI workloads.
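The results above concern multiplying a sparse Boolean matrix (for example, a graph's adjacency matrix) by a dense matrix, the sparse-dense operation central to GNN workloads. For reference, here is a minimal Python sketch of the conventional CSR-style baseline. It is not the CBM format or the CBMM kernel, and the function names are hypothetical. It illustrates one property that any Boolean representation can exploit: every nonzero equals 1, so no values array needs to be stored, and each output row is simply the sum of the rows of the dense operand that the sparse row selects.

```python
def boolean_csr_from_dense(a: list[list[int]]) -> tuple[list[int], list[int]]:
    """Build CSR index arrays (indptr, indices) for a 0/1 matrix.

    Hypothetical helper: unlike general CSR, no values array is kept,
    because every stored entry of a Boolean matrix is 1.
    """
    indptr = [0]
    indices: list[int] = []
    for row in a:
        indices.extend(j for j, v in enumerate(row) if v)
        indptr.append(len(indices))
    return indptr, indices


def boolean_spmm(indptr: list[int], indices: list[int],
                 x: list[list[float]]) -> list[list[float]]:
    """Compute A @ X, where A is a Boolean CSR matrix and X is dense (list of rows)."""
    n_cols = len(x[0]) if x else 0
    out = []
    for i in range(len(indptr) - 1):
        acc = [0.0] * n_cols
        # Row i of the product is the sum of the rows of X whose indices
        # appear in row i of A: additions only, no multiplications.
        for k in range(indptr[i], indptr[i + 1]):
            xrow = x[indices[k]]
            for c in range(n_cols):
                acc[c] += xrow[c]
        out.append(acc)
    return out


if __name__ == "__main__":
    A = [[1, 0, 1],
         [0, 1, 0],
         [1, 1, 1]]
    X = [[1.0, 2.0],
         [3.0, 4.0],
         [5.0, 6.0]]
    indptr, indices = boolean_csr_from_dense(A)
    print(indptr, indices)                   # [0, 2, 3, 6] [0, 2, 1, 0, 1, 2]
    print(boolean_spmm(indptr, indices, X))  # [[6.0, 8.0], [3.0, 4.0], [9.0, 12.0]]
```

Because the inner loop only adds rows of `X`, the cost of this baseline scales with the number of nonzeros in `A`. Formats designed specifically for Boolean matrices target exactly this work and the index storage behind it.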

Benefits

The CBM format and CBMM kernel enable significant improvements in the efficiency of sparse-dense matrix operations, especially those central to GNN workloads. These improvements come without sacrificing model accuracy and are expected to accelerate key stages of AI pipelines in scientific and industrial applications. In computational biology, for example, the method can accelerate tasks such as drug-target interaction prediction by reducing memory usage and computation time. The format is inherently parallelizable and future-proof: because CBMM builds on underlying libraries such as MKL and cuSPARSE, further optimizations in those libraries will also benefit CBMM. More broadly, GNNs are essential for tasks ranging from social network and power grid analysis to recommender systems and natural language processing. The efficiency gains enabled by CBM/CBMM make these workloads more scalable and energy-efficient, facilitating their deployment on extreme-scale HPC infrastructures.

Partners

| INESC-ID, affiliated with Instituto Superior Técnico (IST) of the University of Lisbon, is a leading Portuguese research institute in computer science and electrical engineering. Its Information and Decision Support Systems (IDSS) Lab specializes in algorithm engineering, data science and engineering, and information systems engineering. Notably, the IDSS Lab's work in algorithm engineering encompasses contributions to succinct data structures, bioinformatics, computational biology and machine learning. |

| The Scientific Computing Group at the University of Vienna, led by Prof. Siegfried Benkner, focuses on software solutions for high-end computing systems, including multi-core servers, clusters, supercomputers and cloud infrastructures. Its research covers programming paradigms, compilers, runtime systems and software development environments aimed at solving complex compute- and data-intensive problems in science and engineering. The group operates within the Vienna Scientific Cluster (VSC), a collaborative supercomputing infrastructure, and is preparing for the deployment of MUSICA, Austria’s next-generation supercomputer, which is expected to deliver approximately 40 petaflops of performance. |

Team

- Tomás Almeida

- João N F Alves

- Siegfried Benkner

- Alexandre P Francisco

- Rodrigo Moreira

- Arlindo L Oliveira

- Luís M S Russo

- Cátia Vaz

Contact

Name: Alexandre Francisco

Institution: INESC-ID Lisboa

Email Address: aplf@inesc-id.pt