Study Results

We designed, implemented, and tested four asynchronous linear solvers. One of them, the asynchronous preconditioned conjugate gradient (aPCG) method, showed impressive performance in an industrially relevant application: the simulation of gas turbine combustion.

We tested aPCG in OpenFOAM on a small version of the DLRCJH combustor (24 million cells), one of the grand challenge cases set up during the exaFOAM EuroHPC project. The tests were conducted on two EuroHPC machines: LUMI (using up to 128 nodes = 16,384 cores) and MareNostrum (using up to 64 nodes = 7,168 cores). The DLRCJH combustor case is a transient computational fluid dynamics simulation using a large eddy simulation (LES) turbulence model. It is publicly available from the OpenFOAM HPC repository: https://develop.openfoam.com/committees/hpc/-/tree/develop/combustion/XiFoam/DLRCJH

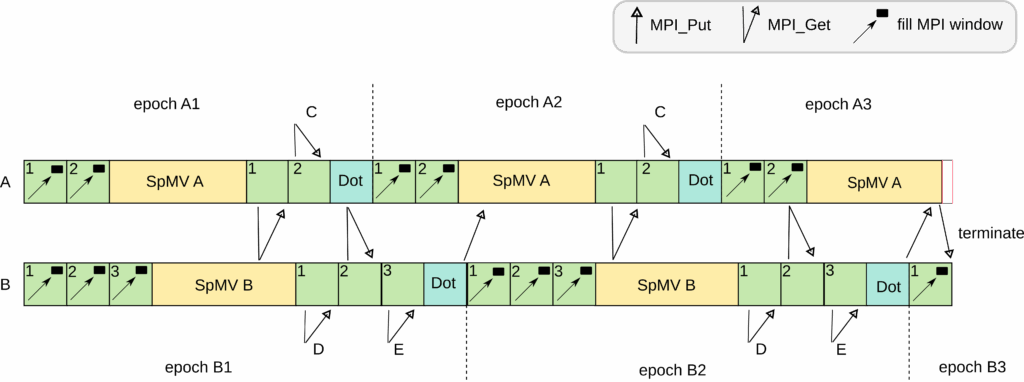

The figures show strong scaling results on LUMI and MareNostrum, respectively; aPCG outperforms PCG at every node count tested. PCG runs most efficiently on 8 nodes, whereas aPCG runs most efficiently on 16 nodes. aPCG shows a 3.1x improvement in time-to-solution and a 1.6x improvement in cost-to-solution compared to PCG.
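The two reported factors are consistent with each other under a simple cost model. As a rough sketch, assume that cost-to-solution is proportional to node count times wall-clock time, and that the quoted factors compare PCG on 8 nodes with aPCG on 16 nodes (the text does not state this pairing explicitly). The function name and the normalised times are illustrative and are not measured data; only the 3.1x ratio and the node counts come from the results above.

```python
def cost_to_solution(nodes: int, wall_time: float) -> float:
    """Cost in node-time units, assuming cost scales as nodes x wall-clock time."""
    return nodes * wall_time


# Normalised, illustrative wall-clock times: only their 3.1x ratio is taken from the text.
pcg_nodes, pcg_time = 8, 3.1
apcg_nodes, apcg_time = 16, 1.0

time_gain = pcg_time / apcg_time
cost_gain = cost_to_solution(pcg_nodes, pcg_time) / cost_to_solution(apcg_nodes, apcg_time)

print(f"time-to-solution gain: {time_gain:.2f}x")
print(f"cost-to-solution gain: {cost_gain:.2f}x")
```

Under these assumptions the cost gain is half the time gain, about 1.55x, which agrees with the reported 1.6x after rounding: aPCG uses twice as many nodes but finishes more than twice as fast.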

Our research relies on two domain-specific languages (DSLs) tailored for scientific computing: OpenFOAM and FreeFEM. Both are open source and have been available for at least 25 years. Both are written in C++ with MPI and aim to give end users easy access to high-level concepts, even in a parallel environment. OpenFOAM is especially suited to computational fluid dynamics with its finite volume discretisation. FreeFEM is a multiphysics toolbox for scientific computing with finite element and/or boundary element discretisations. Both codes can use the PETSc library. FreeFEM also provides access to the parallel domain decomposition libraries ffddm (https://doc.freefem.org/documentation/ffddm/index.html) and HPDDM (https://github.com/hpddm/hpddm).

Benefits

The newly developed aPCG algorithm is asynchronous from start to end. It eliminates all synchronisation (communication) barriers and thereby enables more efficient utilisation of computer systems. It also makes it easier to exploit the energy-efficiency features of next-generation hardware. This is an important step towards the cost-effective and green use of the new generation of exascale supercomputers. It will, for example, benefit computational fluid dynamics, which is of paramount importance for developing energy-efficient technologies and new means of energy production. The codes will be made publicly available under an appropriate GPL license through https://github.com/Wikki-GmbH and https://github.com/FreeFem.
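To illustrate which barriers are meant, the sketch below shows textbook synchronous PCG, the baseline used in the comparison, and not the aPCG algorithm itself, whose details are not given here. It is a serial, pure-Python illustration with a Jacobi preconditioner chosen for simplicity; the helper names, the `stats` counter and the small test matrix are assumptions made for this example. The counter only marks where a distributed implementation would need a global reduction across all processes.

```python
import math


def dot(x, y, stats):
    """Inner product. In a distributed run this is a global reduction
    (for example an MPI all-reduce), so every call is a synchronisation point."""
    stats["reductions"] += 1
    return sum(a * b for a, b in zip(x, y))


def matvec(A, x):
    """Dense matrix-vector product; with a domain-decomposed sparse matrix this
    step typically needs only neighbour (halo) exchange, not a global barrier."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def pcg(A, b, tol=1e-10, max_iter=100):
    """Textbook synchronous PCG with a Jacobi (diagonal) preconditioner."""
    stats = {"reductions": 0}
    inv_diag = [1.0 / A[i][i] for i in range(len(b))]
    x = [0.0] * len(b)
    r = list(b)                                   # residual for the initial guess x = 0
    z = [d * ri for d, ri in zip(inv_diag, r)]    # apply the preconditioner
    p = list(z)
    rz = dot(r, z, stats)                         # global reduction
    iterations = 0
    for iterations in range(1, max_iter + 1):
        Ap = matvec(A, p)
        alpha = rz / dot(p, Ap, stats)            # global reduction
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, Ap)]
        if math.sqrt(dot(r, r, stats)) < tol:     # global reduction (convergence test)
            break
        z = [d * ri for d, ri in zip(inv_diag, r)]
        rz_new = dot(r, z, stats)                 # global reduction
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, iterations, stats["reductions"]


# Small symmetric positive definite test system (1D Laplacian), chosen for illustration.
n = 5
A = [[2.0 if i == j else -1.0 if abs(i - j) == 1 else 0.0 for j in range(n)] for i in range(n)]
b = [1.0] * n

x, iterations, reductions = pcg(A, b)
print(f"converged in {iterations} iterations with {reductions} global reductions")
```

In this formulation every iteration contains at least two reductions whose results are needed before the next update can proceed, so all processes wait for the slowest one. At high node counts with little work per core, that waiting time can dominate, which is the overhead an asynchronous method aims to remove.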

Partners

The Alpines team is a joint team between the applied mathematics laboratory Laboratoire J.L. Lions at Sorbonne University and INRIA (National Institute for Research in Digital Science and Technology), Paris, France. Its research activities focus on parallel scientific computing. The team also develops the free finite element software FreeFEM (FF, https://freefem.org/), which has at least 2,000 regular users. Within AMCG, the team served as an HPC centre, HPC expert, software development expert and application expert.

Wikki GmbH, Wernigerode, Germany, is an SME engineering company specialised in innovative numerical simulation techniques, especially for CFD and OpenFOAM (https://www.openfoam.com/). Within AMCG, the company served as an HPC expert, software development expert and application expert. Dr. Henrik Rusche and Mr. Ranjith Khumar Shanmugasundaram were involved in this project.

Team

- Prof. Frédéric Nataf

- Dr. Lukas Spies

- Dr. Pierre-Henri Tournier

- Dr. Henrik Rusche

- Mr. Ranjith Khumar Shanmugasundaram

Contact

Name: Dr. Henrik Rusche

Institution: Wikki GmbH

Email Address: h.rusche@wikki-gmbh.de